Hey, thanks for the heads-up.

Isn’t this a problem with the OpenAPI specification (the JSON file) rather than with the client bindings? How does dropping OpenAPI client generation solve the API incompatibility problem for non-Python languages?

Is this the {domain_href} path parameter problem we talked about on Slack the other day? For everyone else: the {domain_href} path parameter is used in many places in the API, and I believe it is built on something that is only vaguely defined in OpenAPI. It appears that path parameters are always URL-encoded, so one cannot pass a parameter that looks like /api/pulp/api/v3/domains/UUID: it gets URL-encoded and the result is a 404. This is a known problem with no solution, and it was postponed to OpenAPI 4.0, which should be coming out in 2024 (the specification, that is, not implementations).
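To make the encoding problem concrete, here is a minimal Python sketch using only the standard library. It assumes a client that percent-encodes the whole path parameter value, slashes included, which is what `urllib.parse.quote(..., safe="")` does. The host `pulp.example.com`, the `{domain_href}` path template and the `UUID` placeholder are hypothetical.

```python
from urllib.parse import quote

# Hypothetical href, as it might be returned by the API ("UUID" is a placeholder).
domain_href = "/api/pulp/api/v3/domains/UUID/"

# Assumed client behaviour: encode the path parameter with no "safe" characters,
# so every "/" in the href turns into "%2F".
encoded = quote(domain_href, safe="")
print(encoded)  # %2Fapi%2Fpulp%2Fapi%2Fv3%2Fdomains%2FUUID%2F

# Hypothetical path template where the whole path is the {domain_href} parameter.
template = "https://pulp.example.com{domain_href}"

# Substituting the encoded value gives a URL the server does not route to the domain.
broken_url = template.format(domain_href=encoded)

# What the caller actually needs: the href inserted verbatim, since it already is a path.
working_url = template.format(domain_href=domain_href)
```

With the encoded value, the server receives one opaque segment full of `%2F` instead of the nested path it routes on, which would explain the 404.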

Again, how does dropping client generation solve the problem for non-Python languages?

This looks like giving up on the OpenAPI idea completely, which is sad to see. Instead, what you should really be planning is how to fix this, if you intend to stick with OpenAPI for a while. I have no idea how much work that would be, since I feel I have only scratched the surface with the domain href path parameter problem; I just wanted to let you know.

The reason I find giving up on this sad is that it is fair to assume that once you have an OpenAPI specification, you can just generate a client and it will work. That is not the case today, and it will definitely not be the case once you drop the bindings. You mentioned that these lead to cryptic errors and hard-to-solve issues, which I can confirm, since I was asking you about exactly that on the channel yesterday. The time saved by dropping them may well end up being spent on supporting people on the channels or on Discourse.

In my honest opinion, OpenAPI is not great, because it tries to be open and portable to the point that it is painful to work with. Also, it is REST, which I personally think was a terrible idea from day one. I prefer properly designed functions: RPC-like APIs, for which there are many portable options. End of rant. Sigh.

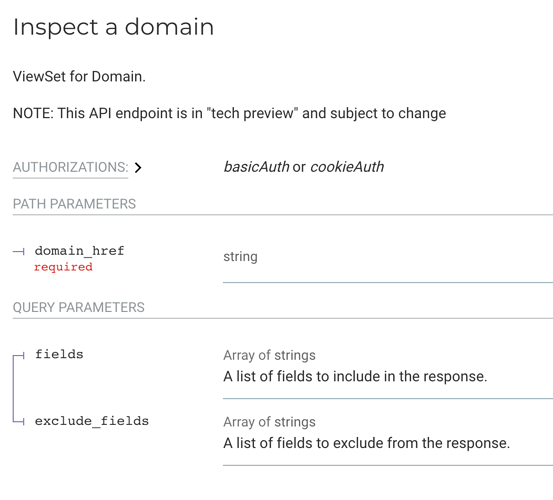

Now, it is what it is. This is not a request for feedback; the subject is clear: you are dropping it. I would like to suggest something, though: could you put together documentation about known issues with OpenAPI and how to work around them in general? You could use the OpenAPI documentation feature itself! Just add a note to each endpoint that takes an href path parameter, explaining the URL-encoding issue and that it is up to the caller to pass the value correctly. Here is an example of the current documentation for one of the endpoints in question:
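As a sketch of what such a note could look like, the Python function below post-processes an already generated OpenAPI document (loaded as a plain dict) and appends a warning to the `description` of every path parameter whose name ends in `_href`. The `_href` naming rule, the note wording, the `annotate_href_params` name and the `{domain_href}` path in the usage part are all assumptions for illustration; how this would be wired into the real schema generation is left open.

```python
# Hypothetical note text; the wording is only a suggestion.
HREF_NOTE = (
    "This parameter takes a complete href as returned by the API, not a single "
    "path segment. Insert it into the URL verbatim: do not percent-encode its "
    "'/' characters, or the server will respond with 404."
)

# Keys of an OpenAPI path item that hold operations (other keys are metadata).
HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options", "trace"}


def annotate_href_params(spec: dict) -> dict:
    """Append HREF_NOTE to every path parameter whose name ends in '_href'."""
    for path_item in spec.get("paths", {}).values():
        # Parameters may be declared on the path item itself or on each operation.
        containers = [path_item] + [
            op for method, op in path_item.items() if method in HTTP_METHODS
        ]
        for container in containers:
            for param in container.get("parameters", []):
                # "$ref" parameters have no "in" key and are skipped here.
                if param.get("in") == "path" and param.get("name", "").endswith("_href"):
                    existing = param.get("description", "")
                    if HREF_NOTE not in existing:  # keep the function idempotent
                        param["description"] = (existing + "\n\n" + HREF_NOTE).strip()
    return spec


# Usage on a tiny hypothetical spec fragment.
spec = {
    "paths": {
        "{domain_href}": {
            "get": {
                "parameters": [
                    {"name": "domain_href", "in": "path", "required": True,
                     "schema": {"type": "string"}}
                ]
            }
        }
    }
}
annotate_href_params(spec)
```

Doing this as a post-processing step means the warning is written once and shows up in every rendered endpoint page that uses such a parameter, rather than being maintained by hand per endpoint.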

There is literally no information about the domain_href path parameter, which is the one causing issues; I would appreciate a word on what to watch out for. This will also make things easier for you when people come asking about the problem: you can just paste a link to the docs.

Cheers.