TL;DR: With the upcoming breaking change release 3.55, we plan to stop publishing the autogenerated python and ruby bindings for pulpcore and plugins.

The “bindings” also termed as “clients” in the Pulp ecosystem are packages for different programming languages automatically generated by [0] from the auto-generated openapi schema. To this day, we publish these packages for pulpcore and most of the plugins with every release in python and ruby.

So why should we stop doing so? Well, there are some serious issues with this approach:

- The bindings are tightly coupled to the very version of the pulp component they were generated from. Even if it may work on occasions, there is no guarantee (and we had reported issues in the past), that the same installation of these clients can talk to servers running different versions of the plugin.

- Even if you have a way to always match the version of the servers plugin with the client installations bindings version, as soon as the pulpcore version the plugin bindings were created with does not match your servers installation, all bets are off.

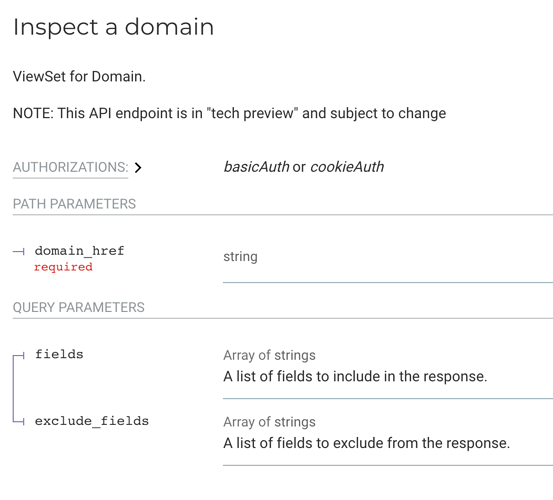

- There are some (at least two) settings for Pulp (rerouting and domains) that lead to significant changes in the api schema. Once you use these features, the bindings generated without them stop working.

- On the more subtle side, the bindings do not complain that you are using an incompatible thing. They just fail with cryptic error messages, or, even worse, fail silently.

All in all, we see no way to publish generic bindings that serve in all the various possible combinations, nor that we publish individual ones for all of these.

But you still want to use the bindings? Apart from that being very clunky to be used in my opinion anyway, you can. The only thing we ask you to do, is to generate the matching bindings for your specific installation (be it a ready server, or a single set of published packages with only one version of each plugin [see settings issue here]) yourself. All the documentation needed should be found in [0].

If you are working on a python tool (or a language able to call into python) that needs to communicate with Pulp, I recommend looking into pulp-glue [1]. That library is handcrafted, handles tasks for you and knows how to deal with different versions of Pulp and plugins.

Sorry to have this on a rather short notice (we expect 3.55 in a small number of weeks), but you know you should not have depended on the published bindings anyway, right?

[0] https://github.com/pulp/pulp-openapi-generator

[1] https://staging-docs.pulpproject.org/pulp-glue/docs/dev/learn/architecture/