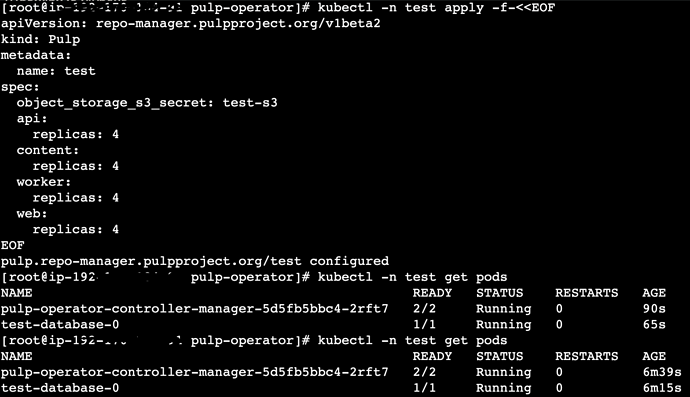

Nice! Glad to know that it worked.

Initially I did not add it, since EKS and S3 are both in AWS and I assumed the keys were not needed.

Unfortunately, we don’t have that level of integration yet, so the credentials need to be passed manually.

Now, do we have a way to use an external PostgreSQL and Redis instead of the containerised ones?

Yes, we do. In this case, create secrets pointing to the external PostgreSQL and Redis instances and reference them in the Pulp CR:

- Create a secret for PostgreSQL:

```shell
kubectl -ntest create secret generic external-database \
  --from-literal=POSTGRES_HOST=my-postgres-host.example.com \
  --from-literal=POSTGRES_PORT=5432 \
  --from-literal=POSTGRES_USERNAME=pulp-admin \
  --from-literal=POSTGRES_PASSWORD=password \
  --from-literal=POSTGRES_DB_NAME=pulp \
  --from-literal=POSTGRES_SSLMODE=prefer
```

- Create a secret for Redis. Make sure to define all the keys (`REDIS_HOST`, `REDIS_PORT`, `REDIS_PASSWORD`, `REDIS_DB`) even if your Redis cluster has no authentication, as in this example:

```shell
kubectl -ntest create secret generic external-redis \
  --from-literal=REDIS_HOST=my-redis-host.example.com \
  --from-literal=REDIS_PORT=6379 \
  --from-literal=REDIS_PASSWORD="" \
  --from-literal=REDIS_DB=""
```

- Update the Pulp CR to use them:

```shell
kubectl -ntest patch pulp test --type=merge -p '{"spec": {"database": {"external_db_secret": "external-database"}, "cache": {"enabled": true, "external_cache_secret": "external-redis"} }}'
```
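Since a missing key (for example an omitted `REDIS_DB`) is easy to overlook, it can help to confirm that each secret holds every key you intended before the operator picks it up. The sketch below is a minimal example under a few assumptions: `secret_keys` and `missing_keys` are hypothetical helper names, key names are listed with kubectl's `go-template` output so no secret values are printed, and the expected key lists are simply the ones used in the commands above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the key names stored in secret $2 in
# namespace $1, one per line. Values are never printed.
secret_keys() {
  kubectl -n "$1" get secret "$2" \
    -o go-template='{{range $k, $v := .data}}{{$k}}{{"\n"}}{{end}}'
}

# Hypothetical helper: read key names (one per line) on stdin and print
# every required key, passed as arguments, that is not among them.
# No output means the secret is complete.
missing_keys() {
  local present required
  present="$(cat)"
  for required in "$@"; do
    # -x: whole-line match, -F: literal string (no regex)
    if ! printf '%s\n' "$present" | grep -qxF -- "$required"; then
      printf '%s\n' "$required"
    fi
  done
}
```

For example, `secret_keys test external-redis | missing_keys REDIS_HOST REDIS_PORT REDIS_PASSWORD REDIS_DB` should print nothing; the same check works for `external-database` with the `POSTGRES_*` keys from the first command.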

pulp-operator should detect the new settings and start redeploying the pods.
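If you are scripting this, one way to wait for the redeploy to finish is to run `kubectl rollout status` on every Deployment in the namespace. This is a sketch, not something pulp-operator provides: `wait_for_deployments` is a hypothetical name, the 10-minute default timeout is an arbitrary assumption, and it waits on all Deployments in the namespace (including any unrelated ones) rather than guessing the exact Pulp deployment names.

```shell
#!/usr/bin/env bash
# Hypothetical helper: block until every Deployment in namespace $1 has
# finished rolling out; fail if listing fails or any rollout times out.
wait_for_deployments() {
  local ns="$1" timeout="${2:-10m}" deployments d
  # "-o name" prints entries such as deployment.apps/test-api
  deployments="$(kubectl -n "$ns" get deployments -o name)" || return 1
  for d in $deployments; do
    kubectl -n "$ns" rollout status "$d" --timeout="$timeout" || return 1
  done
}
```

For example, `wait_for_deployments test` returns once the rollouts are complete, after which the status check can be run.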

Once all the pods are in a READY state, you can verify the connections with:

```shell
kubectl -ntest exec deployment/test-api -- curl -sL localhost:24817/pulp/api/v3/status | jq '{"postgres": .database_connection, "redis": .redis_connection}'
```

```json
{
  "postgres": {
    "connected": true
  },
  "redis": {
    "connected": true
  }
}
```
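The same check can be turned into a pass/fail step, for example at the end of a deployment script. A minimal sketch, assuming the `test` namespace and `test-api` deployment used above, that `curl` is available in the API container (the command above already relies on this) and that `jq` is installed locally; `check_pulp_connections` and `connections_ok` are hypothetical names.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "<postgres> <redis>" from stdin and succeed
# only if both values are the string "true".
connections_ok() {
  local pg redis
  read -r pg redis
  [ "$pg" = "true" ] && [ "$redis" = "true" ]
}

# Query the status endpoint through the API deployment and reduce the
# response to two words, e.g. "true true", before checking them.
# A missing field becomes "null", which makes the check fail.
check_pulp_connections() {
  local ns="${1:-test}" deploy="${2:-test-api}"
  kubectl -n "$ns" exec "deployment/$deploy" -- \
      curl -sL localhost:24817/pulp/api/v3/status \
    | jq -r '"\(.database_connection.connected) \(.redis_connection.connected)"' \
    | connections_ok
}
```

For example, `check_pulp_connections test test-api && echo "PostgreSQL and Redis reachable"`. The exit status of the pipeline is that of `connections_ok`, so a failed `exec` (empty output) also makes the check fail.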

Here are the docs for more information:

https://docs.pulpproject.org/pulp_operator/configuring/database/#configuring-pulp-operator-to-use-an-external-postgresql-installation

https://docs.pulpproject.org/pulp_operator/configuring/cache/#configuring-pulp-operator-to-use-an-external-redis-installation