Problem:

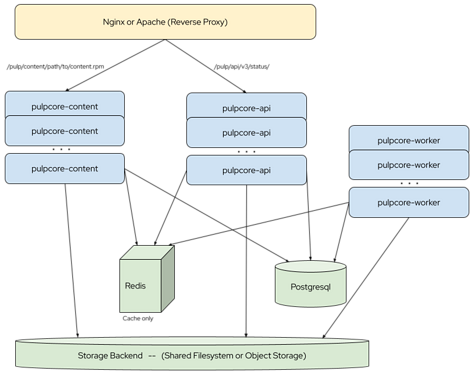

As per this topology:

I recently noticed that the API, worker, and content processes are stateless, so I could provision, say, two EC2 instances running these processes, all connecting to the same Postgres DB and sharing content from the same S3 bucket, without causing any data corruption. Is that right?

Given this architecture, I tried to install Pulp, but the documentation is not clear on some points about this kind of deployment. Specifically, I am not sure whether the whole PyPI install process can be run on each EC2 instance following exactly the same steps, since some steps, such as migrations, seem to modify data. I am following the PyPI install method from the Instructions page of the Pulp Project 3.53.0 documentation, so my questions are:

- Can the Django SECRET_KEY value be specific to each server, so that I can run the install process on each EC2 instance with a different randomly generated SECRET_KEY?

- I assume DB_ENCRYPTION_KEY must be the same across all EC2 instances, since it is used to encrypt and decrypt data stored in Postgres. Is this correct?

- Can Django migrations be run every time an EC2 instance is installed, or should I run them only on the first instance I deploy?

- Can the Django admin password be overwritten with the same value every time I install an EC2 instance?

pulpcore-manager reset-admin-password --password

- Can the Collect Static Media step for the live docs and browsable API also be run on each instance as part of the install process?

pulpcore-manager collectstatic --noinput

- Can the RPM migrations also be run each time an EC2 instance is installed?

In general, since I am using my own Ansible playbook to install the Pulp front-end instances, I want to determine whether the steps described above are idempotent or whether I must run them only on the very first instance I launch. I hope my explanation makes sense, please bear with me. Thank you!
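
To make the question concrete, here is a minimal sketch of the conservative fallback I would use if the steps turn out not to be safe to repeat. It rests on an unconfirmed assumption: that steps writing to the shared Postgres database should run only on the first instance, while steps that only touch the local instance can run everywhere. The names `SHARED_STATE_STEPS`, `LOCAL_STEPS`, and `plan_install` are hypothetical, and the grouping itself is a guess, not something taken from the Pulp documentation.

```python
from typing import List

# ASSUMPTION (not confirmed by the Pulp docs): these steps write to the
# shared Postgres database, so this sketch runs them only once.
# reset-admin-password would fall in this group under the same assumption;
# it is left out here because it needs a password value.
SHARED_STATE_STEPS: List[List[str]] = [
    ["pulpcore-manager", "migrate", "--noinput"],
]

# ASSUMPTION: these steps only affect the local instance.
LOCAL_STEPS: List[List[str]] = [
    ["pulpcore-manager", "collectstatic", "--noinput"],
]


def plan_install(is_first_instance: bool) -> List[List[str]]:
    """Return the commands to run on one EC2 instance, in order."""
    steps: List[List[str]] = []
    if is_first_instance:
        # Shared-state steps are skipped on every later instance.
        steps.extend(SHARED_STATE_STEPS)
    steps.extend(LOCAL_STEPS)
    return steps


if __name__ == "__main__":
    for first in (True, False):
        print(first, [" ".join(cmd) for cmd in plan_install(first)])
```

If all of the steps are in fact idempotent, this split is unnecessary and the playbook can run the same command list on every instance.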

Expected outcome:

Pulpcore version:

"core": "3.48.0"

Pulp plugins installed and their versions:

"versions": {

"rpm": "3.25.1",

"core": "3.48.0",

"file": "3.48.0",

"certguard": "3.48.0"

},

Operating system - distribution and version:

RHEL 9

Other relevant data: